All businesses want to succeed and so they are constantly looking for ways to improve the cost-efficiency of their operations. Big Data was expected to do this by “analyzing everything”, yet it didn’t happen this way. As a provider of DevOps services and Big Data solutions, IT Svit (https://itsvit.com/) knows how to combine DevOps and Big Data efficiently.

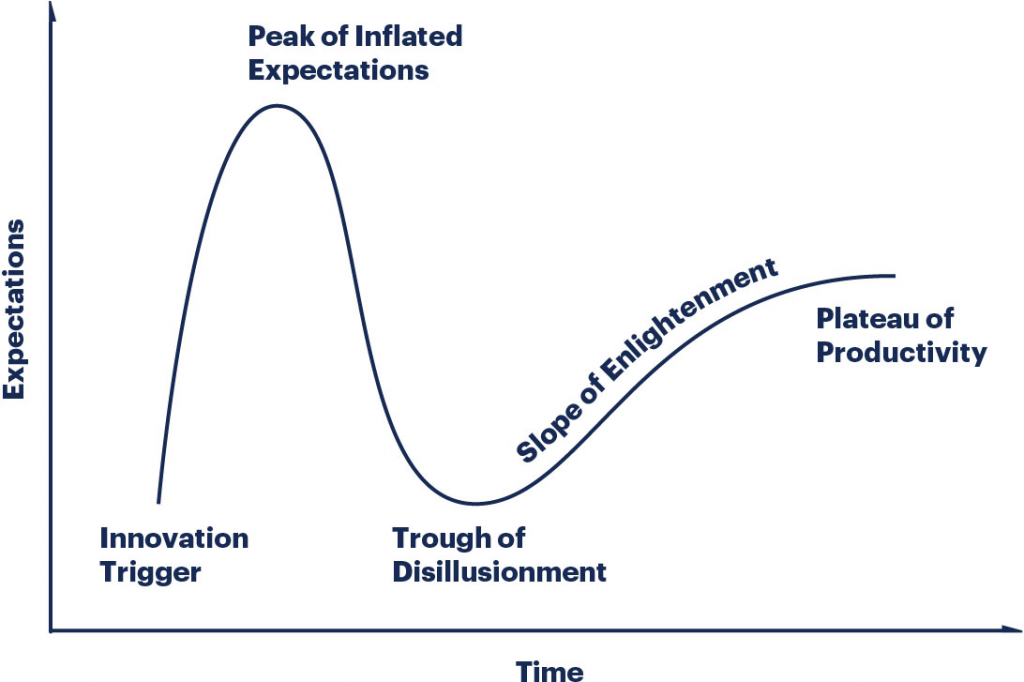

You are surely aware of the Gartner’s hype cycle, where a technology trigger leads to a peak of inflated expectations, which is followed by the trough of disillusionment.

Many companies hopped aboard Big Data hype train of 2015-2016, and most of them are still crawling among the debris of their ambitious Big Data projects. “Analyzing Everything” turned out to be very costly and not efficient, and “finding hidden patterns” within the goldmine of machine-generated data required such exorbitant amounts of computational resources, that the expenses far outweighed the benefits.

However, Managed Services Providers like IT Svit are currently on the slope of enlightenment, or even on the plateau of productivity. We paid attention to an old saying that “a dollar saved is a dollar earned” and learned to apply Big Data analytics to ensure cost-efficiency of DevOps operations at scale.

Let’s assume you have a big infrastructure spread across several geographically distant availability zones. Different parts of your infrastructure receive traffic at approximately the same time every day — but approximate does not cut it at scale. Thus said, you can use the historic data from server logs to train a Machine Learning model that will then track a variety of parameters like CPU usage, RAM usage, etc. You will also be able to set normal thresholds for all of these parameters, so when some parameter exceeds the threshold, the ML model informs the current DevOps engineer on shift. This way, instead of watching the dashboard restlessly, your DevOps staff will be informed once an incident occurs.

We might make another step and prepare several response scenarios based on possible incidents and outcomes. This way the model will not merely inform of the issue, but will also offer several solutions quickly. Once a DevOps engineer approves the best solution, the model increases its weight for this situation and will apply it in the future automatically. This helps save a ton of money when operating infrastructures at scale, where a minute of delay in adding more instances to handle the traffic spike can lead to millions in lost revenues.

Another way to combine DevOps and Big Data is to enable CI/CD processes for managing the ML models used for predictive analytics. The famous “Users who bought this have also bought…” from Amazon used this approach to create an index of relevancy across all their range of books on offer, thus greatly increasing cross-sales and upsell efficiency.

How to ensure everything works as intended though? This requires having an in-depth understanding of both the DevOps and Big Data methodologies, best practices and techniques. Luckily for you, quite a lot of companies worldwide have already successfully implemented this combination and can share their experience, while multiple Managed Services Providers like IT Svit stand ready to help you implement your project into life.

Therefore, all you need to do in order to get the most out of your Big Data is to find a reliable partner, able to combine DevOps and Big Data to help reach your business objectives. Don’t try to analyze everything, it does not work. Find exactly what you want to monitor, and enable automated monitoring for this system — this way you will get the most value out of it!